| Task | Due Date |
|---|---|
| Milestone 1 Submission | Feb 26 2024 |
| Product Identification and Selection | Feb 28 2024 |
| Vendor Identification and Selection | Feb 28 2024 |
| Data Collection | Mar 8 2024 |
| Data Cleaning/Pre-Processing | Mar 17 2024 |
| Milestone 2 Submission | Mar 20 2024 |
| Review Classification (Naive Bayes, Kansei) | Mar 27 2024 |
| Product Classification | Mar 27 2024 |
| Reputation Classification | Mar 27 2024 |
| Exploratory Data Analysis | Mar 31 2024 |
| Milestone 3 Submission | Unknown |
| Model Selection | Apr 7 2024 |
| Model Testing | Apr 11 2024 |
| Complete Final Paper / Milestone 4 | Apr 17 2024 |

CSCI 5302 - Final Project

1 Introduction

1.1 Abstract

We explore the research performed in Guha Majumder, Dutta Gupta, and Paul (2022) and seek to further it by following their recommendations for predicting the perceived usefulness of online customer reviews to potential customers. Our work focuses on the expansion and generalization of their multiple linear regression model. To check the model’s general applicability, we collect additional products and reviews, and we do so for the same products from multiple e-commerce websites to examine whether such models apply to any platform or are platform-specific. Furthermore, we explore the use of additional features and coefficients, as well as other prediction and classification models, to assess the degree to which a customer review is useful to future customers.

1.2 Concept and Motivation

Customers searching for products with specific features and aspects need sufficient information to decide whether to purchase a specific product. According to Guha Majumder, Dutta Gupta, and Paul (2022), a product is considered a search good if a customer can gather and understand its quality before the purchase, while experience goods are those that must be purchased or experienced to be evaluated. When a product leans more toward experience than search, other customers’ experiences can shed more light on its features and return on investment than information directly from the vendor can. Reviews from reliable sources with sufficiently detailed information can enable greater confidence in a purchase, improve customer satisfaction, and smooth the e-commerce process for customers.

We seek to expand upon the research of Guha Majumder, Dutta Gupta, and Paul (2022) by exploring the additional research areas they recommend, in order to improve the model and increase its general applicability.

1.3 Our Research Plan

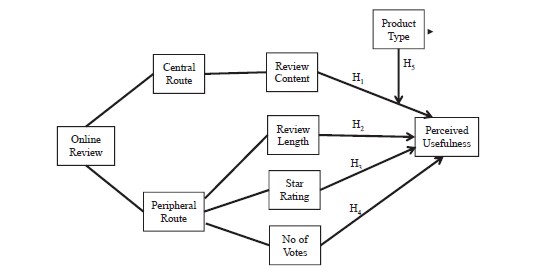

Guha Majumder, Dutta Gupta, and Paul (2022) summarized the aspects and features they considered in predicting the perceived usefulness of a customer review in the model shown in Figure 1.1.

Furthermore, the authors provided the following areas for recommended additional research at the conclusion of their paper:

- Expand the number of products beyond 3 items (one search, one experience, one mixed) to better generalize the model.
- Explore customer or reviewer metadata for classifying reviewer types to enhance model performance.

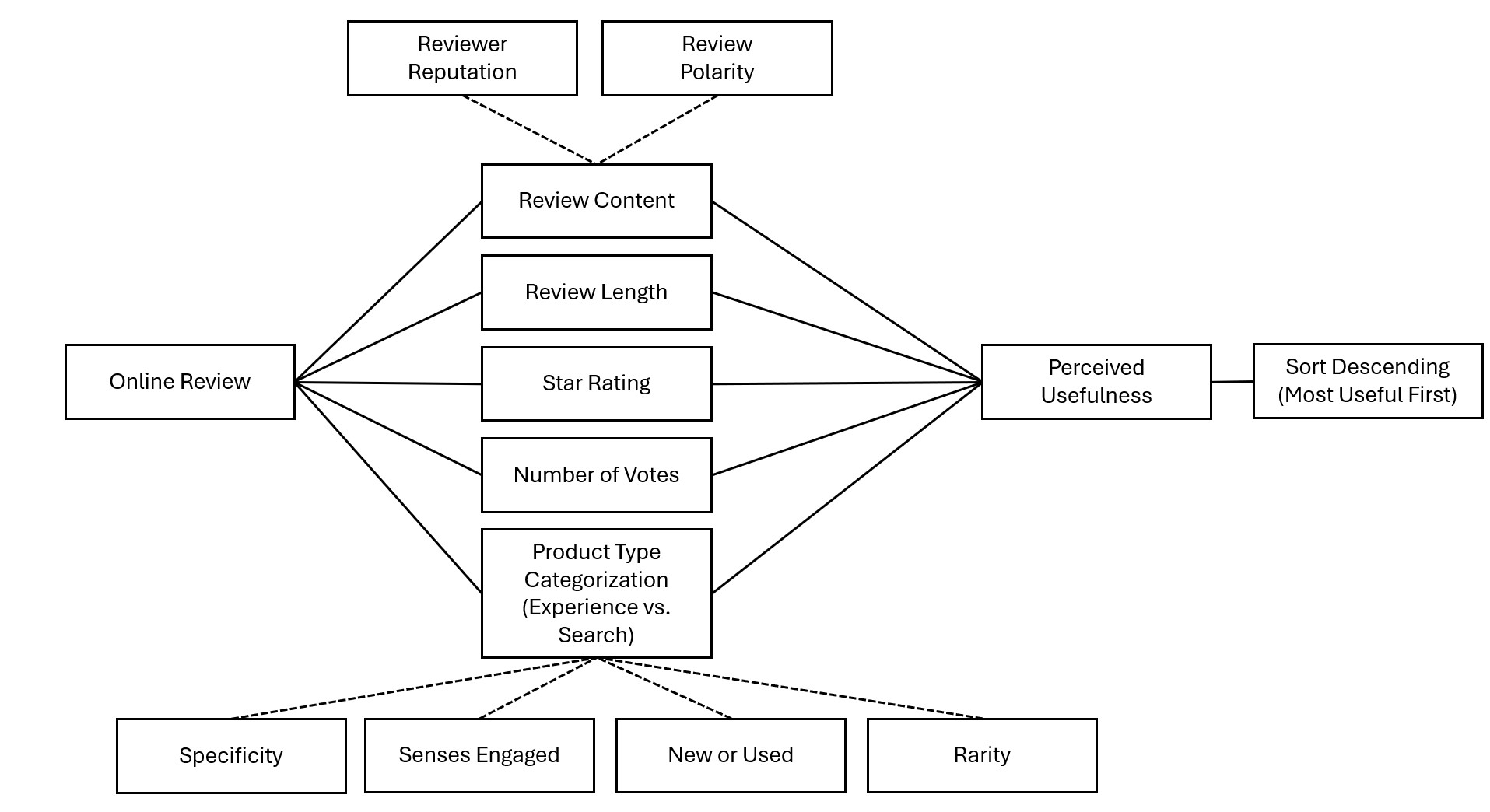

We seek to examine the two items above and to explore the possibility of a scale for determining the extent to which a product is search-based or experience-based. We further seek to inspect additional potential modifiers to the underlying model for statistical and operational applicability. To that end, we have sought out work from other research teams to identify methods we can leverage:

- Determining the polarity of a customer review by employing a classifier such as Naive Bayes.
- Using Kansei engineering approaches to convert unstructured product-related texts into feature–affective opinions.
- Attempting to assess the reliability of a customer’s review based on star rating and a ‘sentiment score’ of their textual feedback.

By exploring the methods employed in these research efforts, individually and in combination, we will pursue potential improvements on the models outlined in Guha Majumder, Dutta Gupta, and Paul (2022). We will examine additional products and product types across multiple e-commerce websites (BestBuy, Target, Amazon). A summary of our explorations is depicted in Figure 1.2.

This plan is not final; it reflects what we intend to explore. If any metrics or measurements are found not to be significant in the analysis and prediction of a review’s usefulness, we will seek to explain the relationships (or lack thereof) and modify the final model accordingly. By incorporating these additional measures, we may be able to improve upon and generalize the original model to multiple product types across multiple e-commerce vendors.

1.4 Why It Matters

Feedback from customers can be beneficial to both vendors and consumers, but it is not always ordered with the most informative or beneficial feedback first. Certain features of products, reviews, and reviewers (such as reviewer reliability, review quality, product quality, and the specificity and detail of the product, among others) can impact the usefulness of the feedback on a customer-by-customer basis. The level of detail, star rating, and number of votes marking a review as useful can all help determine its usefulness to other customers. Leveraging metrics and data associated with a product, a review, and a reviewer together may allow online vendors to improve the consumer e-commerce experience, support identification of issues with product quality and sales, and enable vendors to adjust practices in product marketing, inventory, and manufacture.

Examining additional product types could support a generalization of the authors’ methodology to other products. Furthermore, exploring a sliding scale for search vs. experience-based products can further support generalization and business goals. Producing a reliable scale and methods for classifying a product’s degree of being experience-based can help vendors:

- Determine how best to sort product reviews.
- Identify the most helpful reviews for understanding the product’s performance alongside customer experience and sentiment.
- Adjust the product, its marketing, or future production based upon market efficacy.
- Understand the emotions a customer wants to express through a review, which is crucial because they affect the “recommendation score” of that particular product or of a different one from a similar category.
  - To contribute to determining this recommendation score, we can use a probabilistic machine learning algorithm such as Naive Bayes to determine the polarity (positive, negative, or neutral) of customer reviews.
  - Kansei engineering, typically used for amending product design, can be used to incorporate human emotional responses into the evaluation of a customer review.
- Determine which customers are trustworthy, meaning those who have actually purchased the product versus those who gave a false review. Based on a ‘customer reputation score’, our aim is to classify customers into groups to judge reviewer reliability. This score has two main aspects:
  - A star-rating score, a discrete scale that indicates the customer’s inclination.
  - A text review ‘sentiment score’, computed using NLP, that captures customer opinions based on their words.
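As a rough illustration of how a star-rating score and a text sentiment score might be combined into a reviewer reputation grouping, the sketch below measures how well each review's stars agree with its text. Everything in it is an assumption made for illustration: the tiny word lexicon, the mapping of both scores onto [-1, 1], the consistency formula, and the group cut-offs are hypothetical and are not taken from the cited work. A real analysis would likely swap the lexicon for an established NLP sentiment tool.

```python
# Hypothetical mini-lexicon for illustration only.
POSITIVE = {"great", "love", "excellent", "good", "perfect"}
NEGATIVE = {"bad", "broken", "terrible", "poor", "disappointed"}


def sentiment_score(text: str) -> float:
    """Return a crude sentiment score in [-1, 1] from lexicon word counts."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def star_score(stars: int) -> float:
    """Map a 1-5 star rating onto [-1, 1]; 3 stars maps to 0."""
    return (stars - 3) / 2


def review_consistency(stars: int, text: str) -> float:
    """Agreement between stars and text in [0, 1]; 1 means full agreement."""
    return 1 - abs(star_score(stars) - sentiment_score(text)) / 2


def reputation_group(reviews: list[tuple[int, str]]) -> str:
    """Bucket a reviewer by mean consistency (cut-offs are arbitrary assumptions)."""
    mean = sum(review_consistency(s, t) for s, t in reviews) / len(reviews)
    if mean >= 0.75:
        return "high"
    if mean >= 0.5:
        return "medium"
    return "low"
```

Under these assumptions, a reviewer whose five-star reviews read as strongly negative would land in the "low" group, while a reviewer whose ratings match the tone of their text would land in the "high" group.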

1.5 Literature Survey

Guha Majumder, Dutta Gupta, and Paul (2022)

- Examined multiple linear regression modeling to calculate the usefulness of an online review based upon the type of product (search vs. experience), review sentiment, review star rating, review length, and number of votes for the review as being “useful”. Suggested further exploration using a larger number of products as well as customer/reviewer metadata.

Hu, Gong, and Guo (2010)

- The proposed system employs a two-step process for opinion mining: identifying opinion sentences using a SentiWordNet-based algorithm and extracting product features from all reviews in the database. This feature extraction function focuses on identifying commonly expressed positive or negative opinions before extracting explicit and implicit product features.

Rajeev and Rekha (2015)

- This paper presents techniques such as opinion mining, feature extraction, and Naive Bayes classification for determining review polarity. The authors suggest performing both objective and subjective analysis of features by considering qualitative and quantitative features of the data, respectively.

Wang et al. (2018)

- The authors propose combining Kansei engineering and text mining to help customers in the decision-making process. Their approach categorizes reviews into multiple sections and performs text mining using NLP techniques such as sentence segmentation, tokenization, and POS tagging.
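To make the multiple linear regression approach from the first entry above more concrete, here is a minimal ordinary least squares sketch in plain Python. It is not the cited authors' specification: the feature set (a search-to-experience product score, sentiment, star rating, review length), the `usefulness` target, the coefficients, and the data rows are all synthetic assumptions chosen so that the fit can be checked against known values.

```python
def fit_ols(rows, targets):
    """Fit y = b0 + b1*x1 + ... + bk*xk by solving the normal equations."""
    X = [[1.0, *row] for row in rows]  # prepend an intercept column
    k = len(X[0])
    # Augmented matrix [X^T X | X^T y]
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * y for x, y in zip(X, targets))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic rows: (product_type in [0, 1], sentiment, stars, length in words)
ROWS = [
    (0.0, 0.8, 5, 120), (0.0, -0.5, 2, 40), (0.5, 0.2, 4, 200),
    (0.5, -0.9, 1, 75), (1.0, 0.6, 5, 310), (1.0, -0.2, 3, 15),
    (0.2, 0.1, 3, 90), (0.8, 0.9, 4, 260),
]
# Made-up "true" coefficients: intercept, product_type, sentiment, stars, length
TRUE_COEFFS = [1.0, 2.0, 0.5, 0.3, 0.01]
# Noise-free synthetic usefulness targets generated from TRUE_COEFFS
TARGETS = [
    sum(b * x for b, x in zip(TRUE_COEFFS, [1.0, *row])) for row in ROWS
]

coeffs = fit_ols(ROWS, TARGETS)
print([round(c, 4) for c in coeffs])
```

Because the targets are generated without noise, the fitted coefficients should match `TRUE_COEFFS` up to floating-point error. With real review data, a numerical library would be a more robust choice than hand-rolled normal equations.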

1.6 Research Questions

- Can the model from Guha Majumder, Dutta Gupta, and Paul (2022) be generalized with:
  - a larger volume of products and product types from which to mine data?
  - a sliding scalar multiplier representing the degree to which a product is a “search” (0) or “experience” (1) product?
  - modifiers to review content based upon:
    - customer/reviewer reliability and reputation?
    - review polarity?
- Can the polarity of reviews be judged accurately by using a Naive Bayes classification model (Hu, Gong, and Guo 2010)?
  - What is the impact of different feature extraction methods (e.g., bag-of-words, TF-IDF) on the performance of a Naive Bayes classification model (Wang et al. 2018)?
- Can products be classified on their degree of being search or experience-based by examining product variables such as:
  - Degree of specificity in the product description? (e.g., level of detail, length, numeric values, and descriptive values may suggest the product is more search-based than experience-based)
  - Whether the product is offered in brand-new condition only, or offered as new, used, or refurbished? (e.g., refurbished products may be more search products than experience products)
  - Which of the 5 senses the product engages? (e.g., engagement of more senses, or engagement of solely specific senses such as hearing and vision, may suggest a product is more experience-based than search-based; examine the relationship between search and experience vs. senses engaged)
  - Item rarity (limited production or unique items vs. bulk-produced items)? (e.g., limited production products may be more experience-based than search-based)
- Can newer natural language processing libraries provide a better fit for the Review Content metrics examined by Guha Majumder, Dutta Gupta, and Paul (2022)?
- How does sentiment in customer reviews correlate with customer satisfaction metrics or sales figures for a particular product?
- Can we categorize customer reviews based on customer experience and sentiment?
- Do specific product star ratings tend to incite more reviews, and if so, how does this impact the overall reputation measurement?
- Are specific quality descriptors in text-based reviews (e.g., ‘enthusiastic’, ‘disappointed’) strongly associated with certain rating levels, and how does this association affect product reputation?
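For the Naive Bayes polarity question above, the following is a minimal sketch of a multinomial Naive Bayes classifier over bag-of-words counts with add-one smoothing, written in plain Python. The class name, the tokenizer, and the four training reviews are hypothetical and exist only to show the mechanics. The toy data uses two labels, though a neutral class could be added in the same way. A TF-IDF variant would replace the raw counts with weighted ones, which this sketch does not attempt.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return [w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")]


class NaiveBayesPolarity:
    """Multinomial Naive Bayes over bag-of-words counts (add-one smoothing)."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            logp = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # words unseen in training are ignored
                    logp += math.log((counts[word] + 1) / denom)
            if logp > best_logp:
                best_label, best_logp = label, logp
        return best_label


# Hypothetical toy training set
TRAIN_TEXTS = [
    "great sound and easy setup",
    "love it works perfectly",
    "broke after a week terrible",
    "poor quality very disappointed",
]
TRAIN_LABELS = ["positive", "positive", "negative", "negative"]

model = NaiveBayesPolarity().fit(TRAIN_TEXTS, TRAIN_LABELS)
print(model.predict("terrible quality"))      # negative
print(model.predict("love the great sound"))  # positive
```

Comparing feature extraction methods would then amount to holding the classifier fixed, changing how the per-word weights are computed, and evaluating both variants on the same held-out reviews.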

1.7 Goals / Definition of Success

- Replicate similar results to Guha Majumder, Dutta Gupta, and Paul (2022) with similar product types.
- Expand upon Guha Majumder, Dutta Gupta, and Paul (2022) with additional products, including:
  - Original products from the paper: Digital Music, Video Game, and Grocery Item
  - Additional products (Amazon and Target): Furniture Items, Clothing Items, Home Appliances, Books, Cosmetics, Cleaning Supplies
  - Additional products (Amazon, Target, BestBuy): Electronics
- Verify the goodness of fit of the original model.
- Determine the best metrics and/or modifiers for Review Content and Customer Reliability.
  - Achieve a similar or better fit than the original paper’s modeling; extrapolate to other product types.
- Determine the strength of correlation metrics (support, confidence, lift) for the Naive Bayes review-polarity classifier (Hu, Gong, and Guo 2010).
  - Integrate with the model and test whether Naive Bayes shows strong correlation metrics.
  - Compare and contrast the model with and without this incorporation.
- Successfully compute reputation scores for reviewers.
  - Check applicability across all sites used, to determine validity within the model.
  - If valid and applicable, execute the model against the testing data set to ensure it holds.
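The support, confidence, and lift metrics named in the goals above have standard association-rule definitions, sketched below for a rule "A implies B". Pairing a predicted polarity (A) with a review being marked useful (B) is our assumption for illustration, as are the function name and the eight records, which are invented toy data.

```python
def association_metrics(records, antecedent, consequent):
    """Return (support, confidence, lift) for the rule antecedent -> consequent."""
    n = len(records)
    n_a = sum(1 for r in records if antecedent(r))
    n_b = sum(1 for r in records if consequent(r))
    n_ab = sum(1 for r in records if antecedent(r) and consequent(r))
    support = n_ab / n                            # P(A and B)
    confidence = n_ab / n_a if n_a else 0.0       # P(B | A)
    lift = confidence / (n_b / n) if n_b else 0.0  # P(B | A) / P(B)
    return support, confidence, lift


# Toy records: (predicted polarity, whether the review was marked useful)
RECORDS = (
    [("positive", True)] * 3
    + [("positive", False)]
    + [("negative", True)]
    + [("negative", False)] * 3
)

support, confidence, lift = association_metrics(
    RECORDS,
    antecedent=lambda r: r[0] == "positive",
    consequent=lambda r: r[1],
)
print(support, confidence, lift)  # 0.375 0.75 1.5
```

A lift above 1 would suggest that the antecedent and consequent co-occur more often than expected if they were independent. A lift near 1 would suggest the polarity label adds little information about usefulness.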

1.8 Project Schedule / Timeline

Table 1.1 lays out the major tasks, deliverables, and their respective due dates for this effort.